Block Kriging

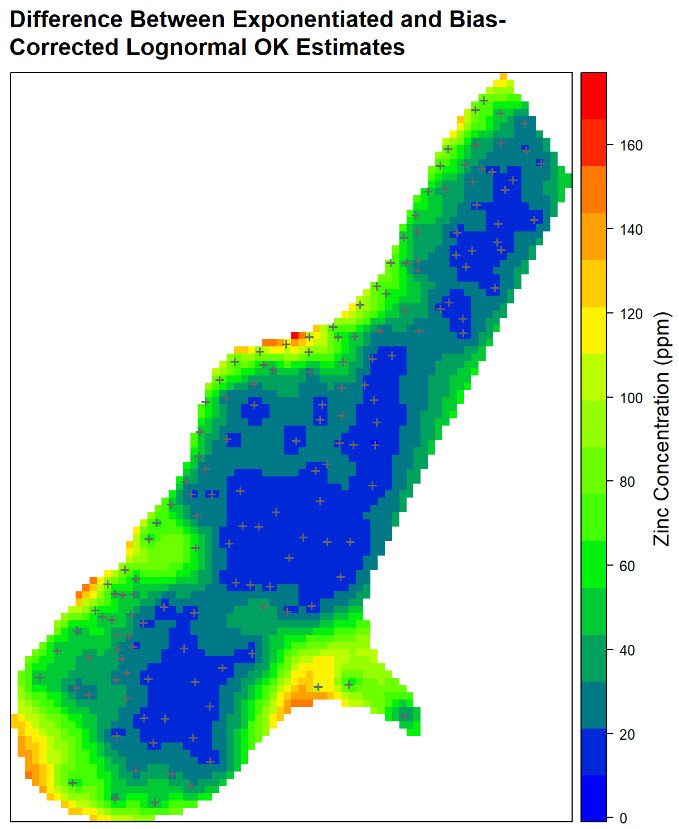

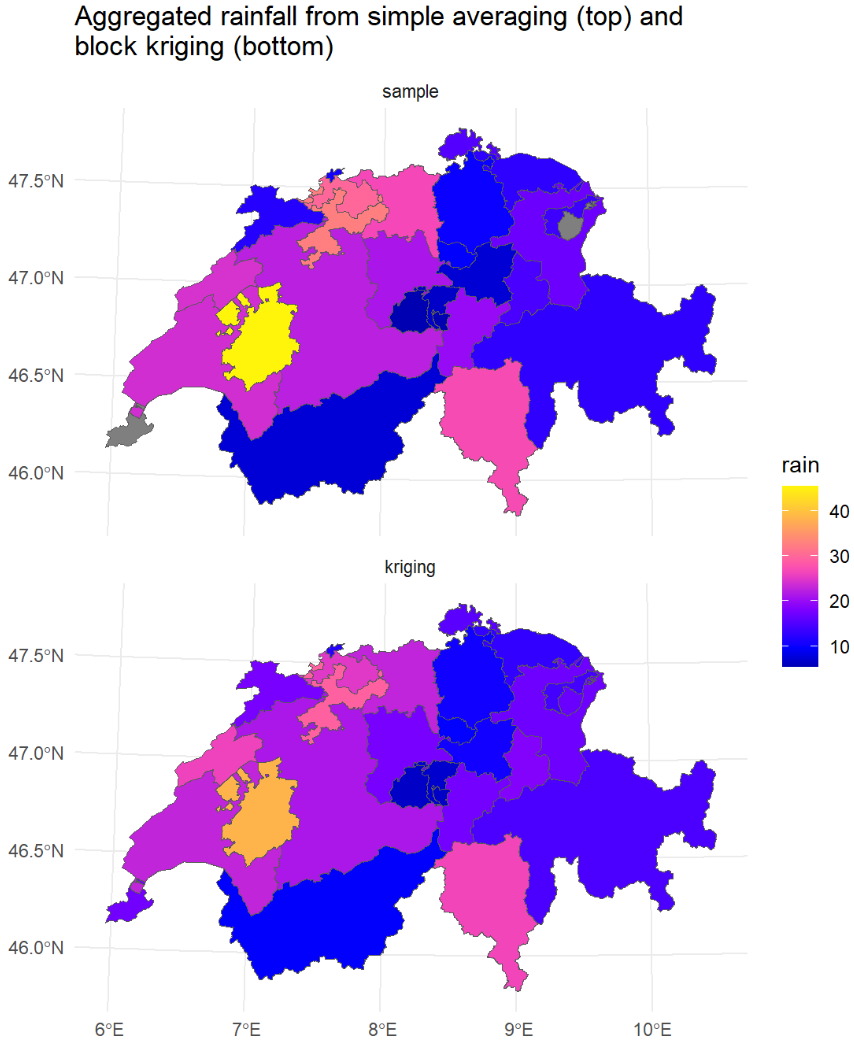

This post explores block kriging, a geostatistical method for estimating the average value over a defined area (a block) rather than at a single point, and contrasts it with point kriging. Using daily rainfall measurements from Switzerland and the R gstat package, the analysis shows that block kriging produces smoother maps and a lower kriging variance than point kriging. The post acknowledges that this smoothing can obscure the true variability of the data. It also highlights where block kriging is useful: when the quantity of interest is a value over a larger spatial support, block kriging yields less variable and more accurate areal mean predictions than simple averaging.
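The minimal pure-Python sketch below makes the point-versus-block contrast concrete. It does not reproduce the post's gstat workflow. The exponential covariance model (sill 1, range 1), the five station coordinates, the rainfall values, and the 4x4 grid used to approximate the block are all assumptions made up for illustration. The only change between the two cases is the right-hand side of the ordinary kriging system. Block kriging replaces each datum-to-point covariance with its average over the block, and it replaces C(0) with the average covariance within the block. That average is smaller than C(0), which lowers the variance.

```python
import math


def cov(p, q, sill=1.0, rng=1.0):
    """Assumed exponential covariance model: C(h) = sill * exp(-h / rng)."""
    return sill * math.exp(-math.dist(p, q) / rng)


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ordinary_kriging(xs, zs, targets):
    """Ordinary kriging of the mean value over `targets`.

    A single target point gives point kriging; many points discretizing
    an area give (approximate) block kriging. Returns (estimate, variance).
    """
    n = len(xs)
    # Data-to-data covariances, bordered by the unbiasedness constraint (sum of weights = 1).
    lhs = [[cov(xs[i], xs[j]) for j in range(n)] + [1.0] for i in range(n)]
    lhs.append([1.0] * n + [0.0])
    # Average covariance between each datum and the target support.
    rhs = [sum(cov(x, t) for t in targets) / len(targets) for x in xs] + [1.0]
    sol = solve(lhs, rhs)
    w, mu = sol[:n], sol[n]  # kriging weights and Lagrange multiplier
    # Average covariance within the support: C(0) for a point, Cbar(B, B) for a block.
    cbb = sum(cov(s, t) for s in targets for t in targets) / len(targets) ** 2
    est = sum(wi * zi for wi, zi in zip(w, zs))
    var = cbb - sum(wi * ri for wi, ri in zip(w, rhs)) - mu  # zip stops before the trailing 1.0
    return est, var


# Hypothetical stations and daily rainfall values (made up, not the Swiss data).
stations = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 2.0)]
rain = [12.0, 15.0, 9.0, 20.0, 5.0]

center = [(0.5, 0.5)]  # point kriging target: centre of the unit square
k = 4  # assumed 4x4 discretization of the unit-square block
block = [((i + 0.5) / k, (j + 0.5) / k) for i in range(k) for j in range(k)]

pt_est, pt_var = ordinary_kriging(stations, rain, center)
bl_est, bl_var = ordinary_kriging(stations, rain, block)
print(f"point kriging at centre: {pt_est:.2f} (variance {pt_var:.3f})")
print(f"block kriging over square: {bl_est:.2f} (variance {bl_var:.3f})")
```

Running the sketch should print a block variance well below the point variance at the block centre. The block estimate itself equals the mean of the point estimates at the discretization points, because the kriging system is linear in its right-hand side; this makes a useful sanity check. What block kriging changes is the variance, which accounts for averaging over the support.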